20 Years of Internet Evolution and Technical Success

11/11/2022

By Carlos Martínez, LACNIC CTO

The history of the Internet goes back fifty years, even longer if one considers some earlier foundational work.

This post attempts to review the last twenty years of Internet history. Why twenty? Because LACNIC, the Internet Address Registry for Latin America and the Caribbean, is turning twenty, and “round” birthdays are always a good opportunity to look back.

The Internet’s Technical Success Factors

What is it that makes the Internet a success? What makes the Internet different from other networks? These questions matter because, while other communications networks existed during the 1970s and 1980s, some of them with global reach, none was as successful or proved as significant as what we now know as the Internet.

1) Scalability supporting growth

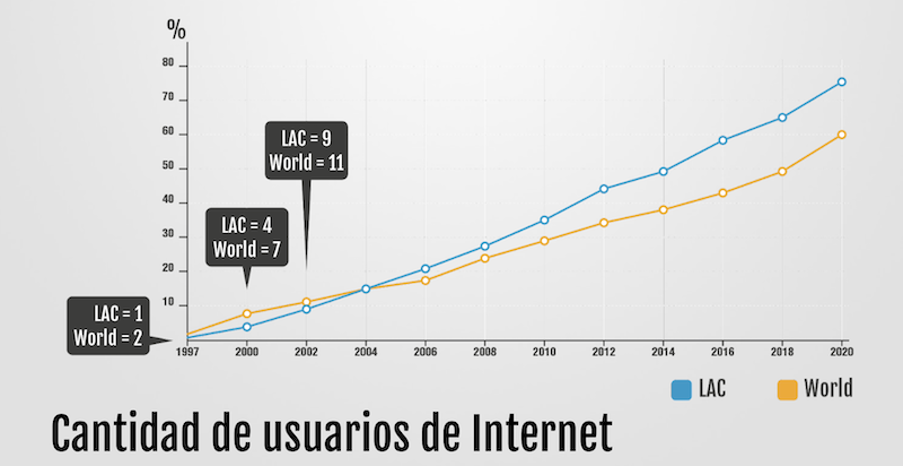

The number of users connected to the Internet has grown continuously over these twenty years, despite two major global economic crises: the dot-com bubble in 2001 and the subprime mortgage crisis in 2008.

Beyond this constant growth, it is worth noting that the LAC region is growing faster than the global average.

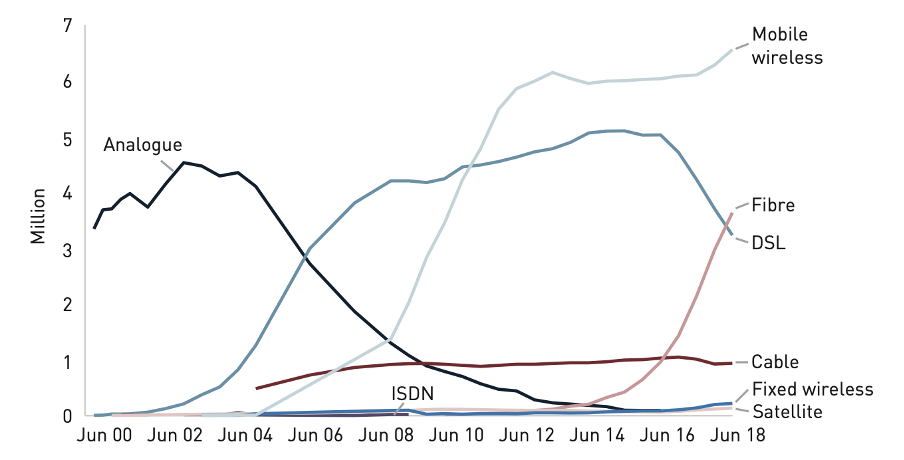

Traffic, measured in Gbps/Tbps, and installed transmission capacity (i.e., how many bits per second could potentially be transmitted) show a similar pattern.

The physical technologies (optical, Wi-Fi, cellular data) and protocols used by the Internet allow scaling both the number of individual Internet users and its total capacity.

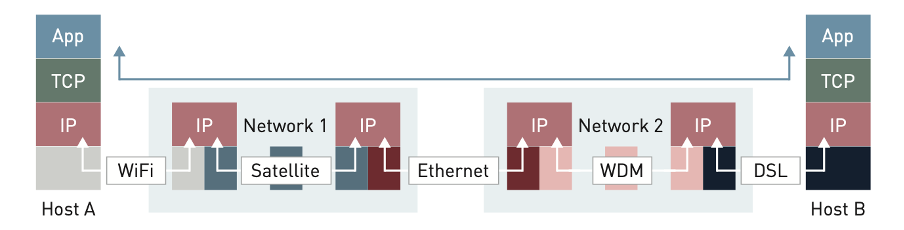

Internet protocols allow transport technologies to be changed freely, which has made it possible for the Internet Protocol of 1980 to function on optical networks that did not exist at that time.

None of this is accidental; it is the result of design decisions made during the 1970s and early 1980s.

2) Flexibility in network technologies

The scalability mentioned above would not be possible if the data communication protocols we employ were not flexible enough to accommodate different ways of connecting users and increasing capacity.

Internet protocols accomplish this in part by following two principles:

- Layering

- A network of networks

Under the layering principle, each protocol has a specific function and can be replaced without touching the protocols “above” or “below” it (hence the notion of “layers”).

The further “down” a protocol is, the closer it is to the physical layer; the higher “up” it is, the closer it is to the user.
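The layering principle can be illustrated with a minimal sketch. Everything in it is hypothetical and invented for illustration (the `Link` interface, the two link classes, the `NetworkLayer` class, and the `send_mail` function); it is not how any real protocol stack is implemented. It only shows that the lowest layer can be swapped without touching the layers above it.

```python
from typing import Protocol


class Link(Protocol):
    """Lowest layer: moves raw bytes. Hypothetical interface for illustration."""

    def transmit(self, frame: bytes) -> bytes: ...


class CopperLink:
    """One possible medium; it simply tags the frame it carries."""

    def transmit(self, frame: bytes) -> bytes:
        return b"COPPER|" + frame


class OpticalLink:
    """A different medium with the same interface."""

    def transmit(self, frame: bytes) -> bytes:
        return b"OPTICAL|" + frame


class NetworkLayer:
    """Middle layer: adds addressing and knows nothing about the medium below."""

    def __init__(self, link: Link) -> None:
        self.link = link

    def send(self, dst: str, payload: bytes) -> bytes:
        packet = dst.encode() + b"|" + payload
        return self.link.transmit(packet)


def send_mail(network: NetworkLayer, dst: str, text: str) -> bytes:
    """Top layer: an application that only talks to the layer directly below."""
    return network.send(dst, b"MAIL:" + text.encode())


# The application and network code are identical in both calls;
# only the link at the bottom of the stack changes.
over_copper = send_mail(NetworkLayer(CopperLink()), "192.0.2.1", "hello")
over_fiber = send_mail(NetworkLayer(OpticalLink()), "192.0.2.1", "hello")
```

In this sketch, the part of each result after the first `|` is the same for both links, which is the point: the upper layers produce the same packet regardless of the medium that carries it.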

According to the “network of networks” principle, the Internet is not a single network. Instead, we should think about it as a federation of autonomous networks, each of which makes its own decisions. This allows one Internet provider to use fiber, another to use LTE, and a third to use a copper network, yet all their users can communicate transparently.

The way this federation of networks interconnects has also shown great flexibility. Some networks interconnect privately in what is known as “one to one” or “private peering.” But there are also “Internet exchange points” or “interconnection points,” which operate as aggregation sites where multiple networks are present and can choose how and on what terms they will connect with the others.
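As a rough illustration of the “network of networks” idea, the federation can be modeled as a graph in which each link exists only because both parties chose to set it up. The network names, the exchange point `IXP-A`, and the helper functions below are all hypothetical, and the model is deliberately simplified: it treats the exchange point as a node and ignores routing policy, which in practice also determines whether traffic may cross a given link.

```python
from collections import deque

# Hypothetical networks and interconnections, for illustration only.
LINKS = [
    ("FiberNet", "IXP-A"),       # joins a shared exchange point
    ("MobileNet", "IXP-A"),      # joins the same exchange point
    ("CopperNet", "MobileNet"),  # private one-to-one peering
]


def build_adjacency(links):
    """Turn the list of bilateral links into a neighbor map."""
    adjacency = {}
    for a, b in links:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    return adjacency


def can_reach(adjacency, src, dst):
    """Breadth-first search: is there any chain of links from src to dst?"""
    seen, queue = {src}, deque([src])
    while queue:
        current = queue.popleft()
        if current == dst:
            return True
        for neighbor in adjacency.get(current, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return False


adjacency = build_adjacency(LINKS)
# FiberNet and CopperNet share no direct link, yet a path exists
# through the exchange point and MobileNet's private peering.
fiber_to_copper = can_reach(adjacency, "FiberNet", "CopperNet")
```

No central authority appears anywhere in the sketch: reachability emerges from the individual interconnection decisions listed in `LINKS`.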

3) Adaptability to new applications

Why does a person pay for the Internet? Why does everyone want to be on the Internet and why is there even talk about Internet access being considered a “right”?

People use the Internet as a tool for multiple purposes: entertainment, banking, commerce, study, communication.

These uses depend on the applications available on the Internet, and these applications have changed radically. Over the past 20 years, we have moved from an Internet where applications basically involved text and static images to an Internet where e-commerce, video conferencing, and streaming are the most sought-after applications.

Around 1980, applications (or rather, the uses of the Internet) were limited to e-mail, setting up remote sessions (time sharing), and file transfer (FTP).

In 2002, the range of applications was much broader: in addition to the ones above, it included the WWW, instant messaging applications (IRC, ICQ, MSN, Yahoo, etc.), and the first e-commerce websites. Between 2000 and 2002, there was an explosion of web portals such as Terra, Starmedia, and others.

In 2022, the range of uses and applications on the Internet is too broad to list in this article. I would, however, like to highlight a few, such as videoconferencing, video streaming (YouTube, Netflix, etc.), e-banking, widespread e-commerce, and notably the social media phenomenon in all its magnitude.

What makes this adaptability to new applications possible? The layering model is largely responsible: it allows new protocols to be introduced in the higher layers (those closer to the user) without altering the underlying technologies on which the network operates.

However, there is a more subtle aspect that underlies this, and that is the notion of “permissionless innovation.” Unlike other communications networks, the Internet does not require “asking for permission” to implement a new application. Any of us can do it.

4) Resilience in the face of changes in the environment

All this growth and these new users are clearly putting pressure on infrastructure, equipment, and operators.

Moreover, as society assigns ever greater value to the transactions that occur online, malicious actors have noticed that there is money to be made through online attacks and criminal activities.

In these last twenty years we have gone through (or are still going through) several crises that have been overcome thanks to the resilience of the Internet.

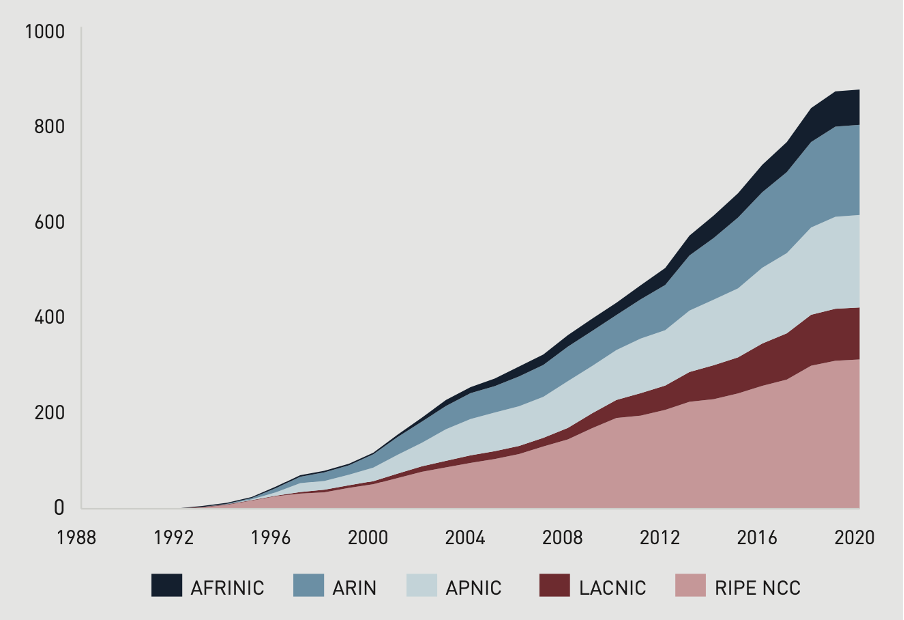

I am referring to the expected collapse of the Internet routing system, which was already being talked about in 1994, and to the expected collapse of the Internet numbering system, the first mentions of which date back even earlier.

The early 2000s were also witness to some of the most notorious malware attacks such as the ILOVEYOU virus and the SQL Slammer worm, which were followed by the rise of Distributed Denial of Service (DDoS) attacks.

DDoS attacks are particularly harmful when they target Internet infrastructure and, if successful, can affect millions of users.

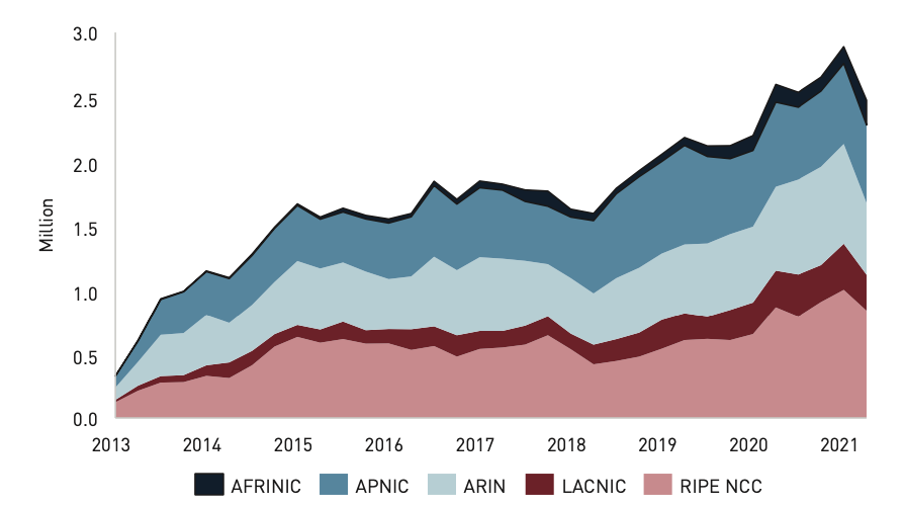

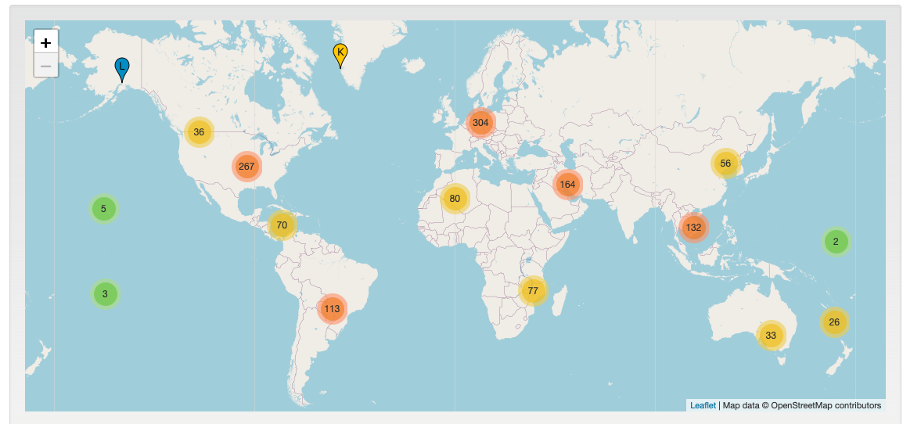

Over the course of these twenty years, the Internet has responded in multiple ways. In the case of DDoS attacks, the way to respond is essentially to increase infrastructure capacity. In addition to the increase in bandwidth mentioned earlier, I would also like to highlight the increase in the number of IXPs and DNS root-server copies.

During this time, work has also been done at the protocol engineering level, including IPv6 (expansion of the numbering space), RPKI (routing system security), and DNSSEC/DoT/DoH (DNS security).
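To give a sense of scale for the expansion of the numbering space, the short sketch below compares the total IPv4 and IPv6 address spaces using Python's standard `ipaddress` module. The variable names are chosen for illustration, and the totals count every possible address, including ranges that are reserved and never assigned to users.

```python
import ipaddress

# The /0 prefixes cover the whole address space of each protocol version.
ipv4_total = ipaddress.ip_network("0.0.0.0/0").num_addresses  # 32-bit addresses
ipv6_total = ipaddress.ip_network("::/0").num_addresses       # 128-bit addresses

# How many complete IPv4 address spaces fit inside the IPv6 space.
growth_factor = ipv6_total // ipv4_total

print(f"IPv4 addresses: 2**{ipv4_total.bit_length() - 1}")
print(f"IPv6 addresses: 2**{ipv6_total.bit_length() - 1}")
print(f"Growth factor:  2**{growth_factor.bit_length() - 1}")
```

IPv4 offers 2^32 (about 4.3 billion) addresses, while IPv6 offers 2^128, so the IPv6 space is 2^96 times larger.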

Conclusions

The Internet is a platform that is permanently under construction and renewal. Like Roman highways or the older streets of any city, its surface is constantly changing, but its spirit and original function are maintained over time.

The views expressed by the authors of this blog are their own and do not necessarily reflect the views of LACNIC.